Since social media became the place teenagers live – where friendships run, trends land, and identity gets tested in public – the question hasn’t been whether it leaves a mark, but how deep that mark goes. Australia is now trying to draw a hard line, becoming the first country to roll out a nationwide minimum-age rule that blocks anyone under 16 from holding accounts on a slate of major platforms. The policy took effect on 10 December 2025, and it places responsibility on the companies themselves, not on kids or parents, to keep minors off their services.

The reasoning is straightforward: years of mounting concern, backed by research linking heavy use to anxiety, low mood and disrupted sleep, along with the more serious risks that come with open platforms – cyberbullying, predatory behaviour, and exposure to harmful content. What’s striking is how uncompromising the move is. The list includes Instagram, Facebook, Threads, Snapchat, TikTok, YouTube, X, Reddit, Twitch and Kick, and platforms face penalties of up to A$49.5 million if they fail to take reasonable steps to prevent under-16s from holding accounts.

Still, telling a generation to ‘just stop’ isn’t a policy detail – it’s a rewiring of daily life. That’s why the rollout is being watched so closely: it is forcing a new era of age-checking and enforcement experiments, while also producing the predictable ripple effect of teens hunting for loopholes and finding new places for the group chat to continue. The bigger question is less about the headline than about the outcome: if you shut one door on the internet, do you meaningfully reduce harm – or simply push it into places that are harder to see?


Could It Work – And Should It?

Officials are positioning the ban as a protective measure – a way to pull a younger generation back from addictive product design, relentless comparison loops, and exposure to content they’re not developmentally equipped to filter. In their telling, it’s a public-health intervention disguised as tech policy: rather than asking families to police every device, the law places the burden on platforms to prevent under-16s from holding accounts in the first place – and to identify and close existing under-16 accounts too.

That’s also where the criticism lands. Opponents argue enforcement at scale is notoriously hard, and any system robust enough to verify age starts raising uncomfortable questions about privacy and data collection. Civil liberties groups – and some child-rights advocates – warn the ban could produce a darker side effect: cut teens off from the biggest, most visible platforms, and you don’t erase their online lives; you may push them into smaller, less regulated spaces where oversight is thinner and harm is harder to detect.

The Rules Of Enforcement – And How Kids Are Dodging It

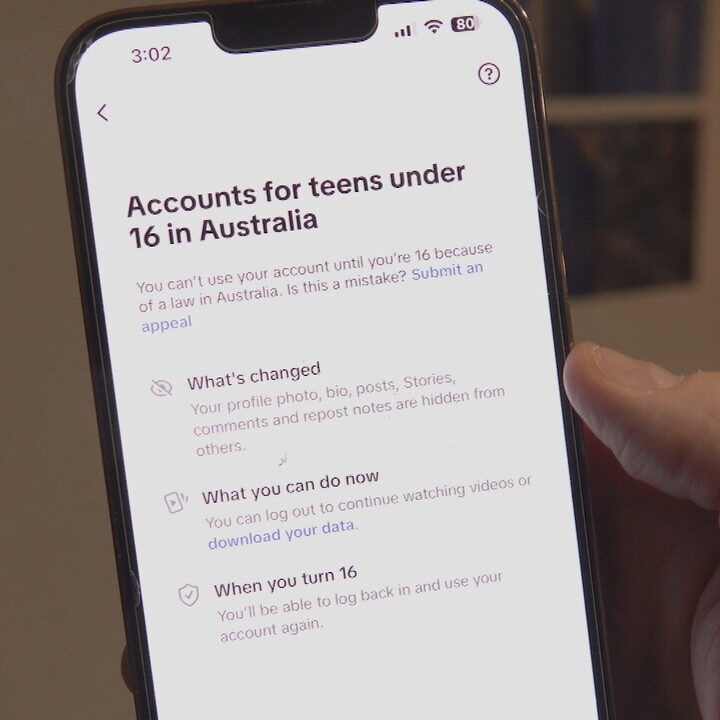

As 2026 rolls in, the real story is the mechanics. Regulators aren’t interested in the enter-your-birthday routine; platforms are being pushed to adopt stronger age checks, and the penalty for getting it wrong is far from a slap on the wrist. The expectation is twofold: tighten the front door, and remove the under-16 accounts already inside. Meta has begun flagging suspected under-16 users and warning them about the new rule, with shutdowns starting to follow. YouTube is expected to lean on broader Google account signals rather than treating age checks as a standalone app problem. Other platforms are still finalising their processes, but the timeline matters: once enforcement hardens, non-compliant platforms can be hit with multi-million-dollar fines.

In theory, age assurance is solvable: ID checks, selfie-based age estimation, app store or device cross-checks, and stricter default settings. In practice, teens are already adapting by adjusting profiles to appear older, setting up backup accounts, using VPNs, and drifting toward alternative apps. Early enforcement, then, looks less like a clean lockout and more like a transition phase: some get blocked, some migrate, and some slip through with accounts engineered to look legitimate.

Why Everyone Is Watching

Australia has become the test case. If the model holds – whether through measurable harm reduction, reduced time online, or sustained public backing – it could give lawmakers elsewhere the confidence to follow, turning Australia into a blueprint rather than an outlier. That would force Big Tech toward heavier verification, redesigned onboarding, and a rethink of engagement systems built to maximise attention – or toward accepting that more governments may decide the safest young user is the one who never signs up at all.

Prime Minister Anthony Albanese has compared the ban to minimum drinking-age laws: even if some find ways around the rule, drawing a clear line can still reset norms – and give society something concrete to enforce.

Born in Korea and raised in Hong Kong, Min Ji has combined her degree in anthropology and creative writing with her passion for going on unsolicited tangents as an editor at Friday Club. In between watching an endless number of movies, she enjoys trying new cocktails and pastas while occasionally snapping a few pictures.